Java并发编程是现代高并发系统设计的核心技术,JUC(java.util.concurrent)框架提供了完整的并发解决方案。本文从底层实现原理出发,深入分析线程池工作机制、锁优化策略、并发集合数据结构,并结合实际业务场景提供完整的性能优化方案和最佳实践。

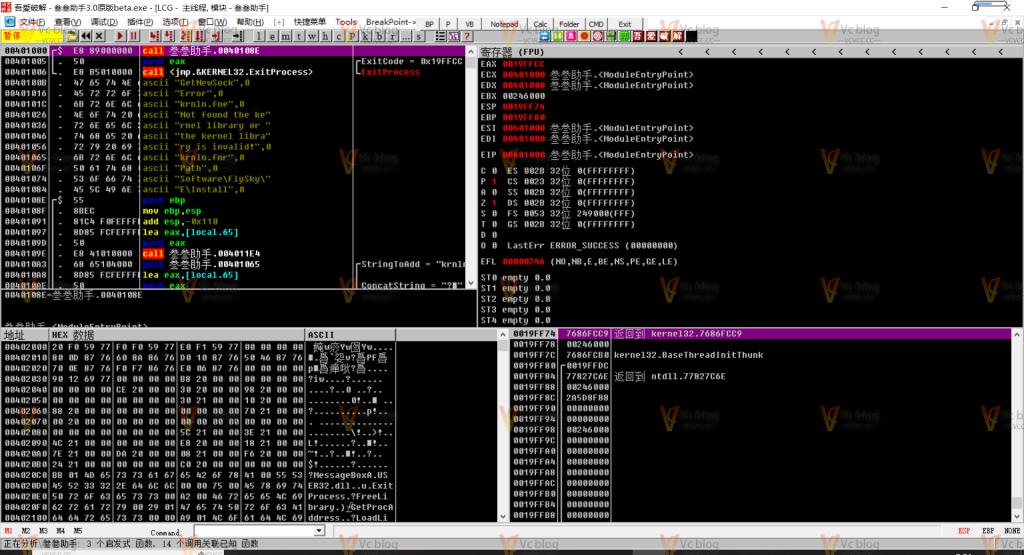

![图片[1]-Java并发编程深度解析:JUC高并发框架原理与实战最佳实践](https://blogimg.vcvcc.cc/2025/11/20251114112719536-1024x768.png?imageView2/0/format/webp/q/75)

一、JUC框架架构深度剖析

1. JUC包层次结构与设计哲学

/**

* JUC框架核心组件分析

*/

public class JUCArchitectureAnalysis {

/**

* JUC包核心组件分类:

* 1. 原子操作类 (Atomic) - 无锁编程基础

* 2. 锁框架 (Locks) - AQS抽象队列同步器

* 3. 并发集合 (Collections) - CAS优化数据结构

* 4. 线程池 (Executor) - 线程生命周期管理

* 5. 同步工具 (Tools) - CountDownLatch/CyclicBarrier等

*/

// AQS(AbstractQueuedSynchronizer)核心原理演示

static class CustomLock extends AbstractQueuedSynchronizer {

private static final long serialVersionUID = 1L;

// 尝试获取锁

@Override

protected boolean tryAcquire(int arg) {

// CAS操作:期望0,更新为1

return compareAndSetState(0, 1);

}

// 尝试释放锁

@Override

protected boolean tryRelease(int arg) {

setState(0); // 不需要CAS,因为只有持有锁的线程能释放

return true;

}

// 是否独占模式

@Override

protected boolean isHeldExclusively() {

return getState() == 1;

}

public void lock() {

acquire(1);

}

public void unlock() {

release(1);

}

}

/**

* AQS队列状态分析工具

*/

public static void analyzeAQSState(AbstractQueuedSynchronizer aqs) {

System.out.println("AQS状态分析:");

System.out.println("State: " + aqs.getState());

// 通过反射获取内部队列信息(实际生产环境慎用)

try {

Field headField = AbstractQueuedSynchronizer.class.getDeclaredField("head");

Field tailField = AbstractQueuedSynchronizer.class.getDeclaredField("tail");

headField.setAccessible(true);

tailField.setAccessible(true);

Object head = headField.get(aqs);

Object tail = tailField.get(aqs);

System.out.println("等待队列头: " + head);

System.out.println("等待队列尾: " + tail);

} catch (Exception e) {

// 忽略反射异常

}

}

}

/**

* 内存屏障与可见性验证

*/

public class MemoryBarrierDemo {

private volatile boolean flag = false;

private int nonVolatileValue = 0;

public void writer() {

nonVolatileValue = 42; // 普通写

flag = true; // volatile写,建立happens-before关系

}

public void reader() {

if (flag) { // volatile读,能看到之前的所有写操作

// 由于happens-before,这里一定能看到nonVolatileValue = 42

System.out.println("Non-volatile value: " + nonVolatileValue);

}

}

/**

* 验证指令重排序

*/

private static int x = 0, y = 0;

private static int a = 0, b = 0;

public static void testReorder() throws InterruptedException {

for (int i = 0; i < 100000; i++) {

x = y = a = b = 0;

Thread t1 = new Thread(() -> {

a = 1; // 操作1

x = b; // 操作2

});

Thread t2 = new Thread(() -> {

b = 1; // 操作3

y = a; // 操作4

});

t1.start();

t2.start();

t1.join();

t2.join();

// 如果发生指令重排序,可能出现 x=0,y=0

if (x == 0 && y == 0) {

System.out.println("检测到指令重排序! i=" + i);

break;

}

}

}

}二、线程池深度优化与监控

1. 线程池核心参数动态调优

/**

* 动态可配置线程池

*/

public class DynamicThreadPoolExecutor extends ThreadPoolExecutor {

private final String poolName;

private final ThreadPoolMetrics metrics;

// 监控指标

private final AtomicLong totalTasks = new AtomicLong();

private final AtomicLong completedTasks = new AtomicLong();

private final AtomicLong rejectedTasks = new AtomicLong();

private final LongAdder activeThreads = new LongAdder();

private final Histogram taskDurationHistogram;

public DynamicThreadPoolExecutor(String poolName,

int corePoolSize,

int maximumPoolSize,

long keepAliveTime,

TimeUnit unit,

BlockingQueue<Runnable> workQueue,

ThreadFactory threadFactory,

RejectedExecutionHandler handler) {

super(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory, handler);

this.poolName = poolName;

this.metrics = new ThreadPoolMetrics(poolName);

this.taskDurationHistogram = new Histogram(TimeUnit.SECONDS.toMillis(10));

}

@Override

public void execute(Runnable command) {

totalTasks.incrementAndGet();

long startTime = System.currentTimeMillis();

super.execute(() -> {

activeThreads.increment();

try {

command.run();

completedTasks.incrementAndGet();

} finally {

activeThreads.decrement();

long duration = System.currentTimeMillis() - startTime;

taskDurationHistogram.recordValue(duration);

metrics.recordTaskDuration(duration);

}

});

}

@Override

protected void beforeExecute(Thread t, Runnable r) {

super.beforeExecute(t, r);

metrics.recordThreadStart();

}

@Override

protected void afterExecute(Runnable r, Throwable t) {

super.afterExecute(r, t);

metrics.recordThreadFinish();

if (t != null) {

metrics.recordTaskError();

}

}

@Override

protected void terminated() {

super.terminated();

metrics.recordPoolShutdown();

}

/**

* 动态调整核心参数

*/

public void dynamicAdjust(int newCorePoolSize, int newMaximumPoolSize) {

if (newCorePoolSize > 0) {

setCorePoolSize(newCorePoolSize);

}

if (newMaximumPoolSize >= newCorePoolSize) {

setMaximumPoolSize(newMaximumPoolSize);

}

}

/**

* 获取线程池状态快照

*/

public ThreadPoolSnapshot getSnapshot() {

return new ThreadPoolSnapshot(

poolName,

getPoolSize(),

getActiveCount(),

getCorePoolSize(),

getMaximumPoolSize(),

getQueue().size(),

getQueue().remainingCapacity(),

getCompletedTaskCount(),

totalTasks.get(),

rejectedTasks.get(),

getLargestPoolSize(),

getTaskCount()

);

}

/**

* 基于负载的动态调整策略

*/

public void autoScaleBasedOnLoad() {

ThreadPoolSnapshot snapshot = getSnapshot();

double loadFactor = calculateLoadFactor(snapshot);

if (loadFactor > 0.8) {

// 高负载,扩容

int newCoreSize = Math.min(

getCorePoolSize() * 2,

getMaximumPoolSize()

);

dynamicAdjust(newCoreSize, getMaximumPoolSize());

metrics.recordAutoScaleUp();

} else if (loadFactor < 0.3 && getCorePoolSize() > 1) {

// 低负载,缩容

int newCoreSize = Math.max(1, getCorePoolSize() / 2);

dynamicAdjust(newCoreSize, getMaximumPoolSize());

metrics.recordAutoScaleDown();

}

}

private double calculateLoadFactor(ThreadPoolSnapshot snapshot) {

int totalCapacity = snapshot.getCorePoolSize() + snapshot.getQueueRemainingCapacity();

if (totalCapacity == 0) return 1.0;

int currentLoad = snapshot.getActiveCount() + snapshot.getQueueSize();

return (double) currentLoad / totalCapacity;

}

}

/**

* 线程池状态快照

*/

class ThreadPoolSnapshot {

private final String poolName;

private final int poolSize;

private final int activeCount;

private final int corePoolSize;

private final int maximumPoolSize;

private final int queueSize;

private final int queueRemainingCapacity;

private final long completedTaskCount;

private final long totalTasks;

private final long rejectedTasks;

private final int largestPoolSize;

private final long taskCount;

// 构造函数、getter方法...

@Override

public String toString() {

return String.format(

"ThreadPool[%s]: active=%d, pool=%d, queue=%d/%d, completed=%d, rejected=%d",

poolName, activeCount, poolSize, queueSize,

queueSize + queueRemainingCapacity, completedTaskCount, rejectedTasks

);

}

}

/**

* 线程池监控指标收集

*/

class ThreadPoolMetrics {

private final String poolName;

private final Meter taskRate;

private final Timer taskDuration;

private final Counter activeThreads;

private final Counter rejectedTasks;

private final Counter autoScaleEvents;

public ThreadPoolMetrics(String poolName) {

this.poolName = poolName;

// 初始化监控指标(这里使用简化的实现)

this.taskRate = new Meter();

this.taskDuration = new Timer();

this.activeThreads = new Counter();

this.rejectedTasks = new Counter();

this.autoScaleEvents = new Counter();

}

public void recordTaskDuration(long durationMs) {

taskDuration.record(durationMs, TimeUnit.MILLISECONDS);

}

public void recordThreadStart() {

activeThreads.increment();

}

public void recordThreadFinish() {

activeThreads.decrement();

}

public void recordTaskError() {

// 错误统计

}

public void recordPoolShutdown() {

// 关闭事件记录

}

public void recordAutoScaleUp() {

autoScaleEvents.increment();

}

public void recordAutoScaleDown() {

autoScaleEvents.increment();

}

}2. 线程池任务执行链路追踪

/**

* 支持链路追踪的线程池

*/

public class TraceableThreadPoolExecutor extends ThreadPoolExecutor {

private static final ThreadLocal<Map<String, String>> traceContext = new ThreadLocal<>();

public TraceableThreadPoolExecutor(int corePoolSize, int maximumPoolSize,

long keepAliveTime, TimeUnit unit,

BlockingQueue<Runnable> workQueue,

ThreadFactory threadFactory,

RejectedExecutionHandler handler) {

super(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory, handler);

}

/**

* 包装Runnable,传递trace上下文

*/

@Override

public void execute(Runnable command) {

// 捕获调用线程的trace上下文

Map<String, String> currentTraceContext = captureTraceContext();

Runnable tracedTask = () -> {

try {

// 在任务执行线程中设置trace上下文

if (currentTraceContext != null) {

traceContext.set(currentTraceContext);

}

command.run();

} finally {

// 清理threadlocal

traceContext.remove();

}

};

super.execute(tracedTask);

}

/**

* 支持Callable的提交方法

*/

@Override

public <T> Future<T> submit(Callable<T> task) {

Map<String, String> currentTraceContext = captureTraceContext();

Callable<T> tracedTask = () -> {

try {

if (currentTraceContext != null) {

traceContext.set(currentTraceContext);

}

return task.call();

} finally {

traceContext.remove();

}

};

return super.submit(tracedTask);

}

private Map<String, String> captureTraceContext() {

// 从当前线程捕获trace信息

Map<String, String> context = new HashMap<>();

context.put("traceId", generateTraceId());

context.put("spanId", generateSpanId());

context.put("thread", Thread.currentThread().getName());

context.put("timestamp", String.valueOf(System.currentTimeMillis()));

return context;

}

private String generateTraceId() {

return UUID.randomUUID().toString().replace("-", "").substring(0, 16);

}

private String generateSpanId() {

return Long.toHexString(ThreadLocalRandom.current().nextLong());

}

/**

* 获取当前任务的trace上下文

*/

public static Map<String, String> getCurrentTraceContext() {

return traceContext.get();

}

/**

* 创建带trace信息的任务包装器

*/

public static Runnable wrapWithTrace(Runnable task, Map<String, String> context) {

return () -> {

try {

traceContext.set(context);

task.run();

} finally {

traceContext.remove();

}

};

}

}

/**

* 线程池任务执行监控

*/

class ThreadPoolTaskMonitor {

private final Map<String, TaskExecutionStats> taskStats = new ConcurrentHashMap<>();

private final ScheduledExecutorService monitorExecutor =

Executors.newSingleThreadScheduledExecutor();

public void startMonitoring(ThreadPoolExecutor executor, String poolName) {

monitorExecutor.scheduleAtFixedRate(() -> {

ThreadPoolSnapshot snapshot = ((DynamicThreadPoolExecutor) executor).getSnapshot();

recordStats(poolName, snapshot);

// 检查异常情况

checkForAnomalies(snapshot);

}, 0, 5, TimeUnit.SECONDS);

}

private void recordStats(String poolName, ThreadPoolSnapshot snapshot) {

TaskExecutionStats stats = taskStats.computeIfAbsent(poolName,

k -> new TaskExecutionStats());

stats.recordSnapshot(snapshot);

}

private void checkForAnomalies(ThreadPoolSnapshot snapshot) {

// 检查线程池死锁

if (snapshot.getActiveCount() == snapshot.getPoolSize() &&

snapshot.getQueueSize() > 0 &&

snapshot.getCompletedTaskCount() == 0) {

System.err.println("可能的线程池死锁: " + snapshot.getPoolName());

}

// 检查任务拒绝率

double rejectionRate = (double) snapshot.getRejectedTasks() /

Math.max(1, snapshot.getTotalTasks());

if (rejectionRate > 0.1) {

System.err.println("高任务拒绝率: " + rejectionRate);

}

}

public void generateReport() {

System.out.println("=== 线程池监控报告 ===");

taskStats.forEach((poolName, stats) -> {

System.out.println("线程池: " + poolName);

System.out.println(stats.generateReport());

System.out.println("-------------------");

});

}

}

class TaskExecutionStats {

private final List<ThreadPoolSnapshot> snapshots = new ArrayList<>();

private long maxQueueSize = 0;

private long maxActiveThreads = 0;

public void recordSnapshot(ThreadPoolSnapshot snapshot) {

snapshots.add(snapshot);

maxQueueSize = Math.max(maxQueueSize, snapshot.getQueueSize());

maxActiveThreads = Math.max(maxActiveThreads, snapshot.getActiveCount());

}

public String generateReport() {

if (snapshots.isEmpty()) return "无数据";

ThreadPoolSnapshot latest = snapshots.get(snapshots.size() - 1);

return String.format(

"最大队列深度: %d, 最大活跃线程: %d, 总完成任务: %d, 拒绝任务: %d",

maxQueueSize, maxActiveThreads, latest.getCompletedTaskCount(),

latest.getRejectedTasks()

);

}

}三、锁机制性能优化与死锁预防

1. 锁粒度优化与分段锁实战

/**

* 高性能分段锁Map实现

*/

public class SegmentLockMap<K, V> {

// 分段数量 - 通常为2的幂次方

private static final int DEFAULT_CONCURRENCY_LEVEL = 16;

private final int segmentMask;

private final int segmentShift;

private final Segment<K, V>[] segments;

/**

* 分段内部类

*/

static final class Segment<K, V> extends ReentrantLock {

private volatile HashEntry<K, V>[] table;

private volatile int count;

private transient int modCount;

// 分段内的哈希表操作

V get(K key, int hash) {

if (count != 0) { // 读volatile变量

HashEntry<K, V> e = getFirst(hash);

while (e != null) {

if (e.hash == hash && key.equals(e.key)) {

V v = e.value;

if (v != null) { // 检查值是否被清除

return v;

}

// 值被清除,重新读取

return readValueUnderLock(e);

}

e = e.next;

}

}

return null;

}

V put(K key, int hash, V value, boolean onlyIfAbsent) {

lock();

try {

// 分段内的put实现...

return null;

} finally {

unlock();

}

}

private HashEntry<K, V> getFirst(int hash) {

HashEntry<K, V>[] tab = table;

return tab[hash & (tab.length - 1)];

}

private V readValueUnderLock(HashEntry<K, V> e) {

lock();

try {

return e.value;

} finally {

unlock();

}

}

}

/**

* 哈希条目

*/

static final class HashEntry<K, V> {

final K key;

final int hash;

volatile V value;

final HashEntry<K, V> next;

HashEntry(K key, int hash, HashEntry<K, V> next, V value) {

this.key = key;

this.hash = hash;

this.next = next;

this.value = value;

}

}

public SegmentLockMap(int initialCapacity, float loadFactor, int concurrencyLevel) {

// 确保分段数量为2的幂次方

int sshift = 0;

int ssize = 1;

while (ssize < concurrencyLevel) {

++sshift;

ssize <<= 1;

}

segmentShift = 32 - sshift;

segmentMask = ssize - 1;

// 创建分段数组

this.segments = new Segment[ssize];

for (int i = 0; i < this.segments.length; ++i) {

this.segments[i] = new Segment<>();

}

}

/**

* 根据key的hash选择分段

*/

private Segment<K, V> segmentFor(int hash) {

return segments[(hash >>> segmentShift) & segmentMask];

}

public V get(K key) {

int hash = hash(key.hashCode());

return segmentFor(hash).get(key, hash);

}

public V put(K key, V value) {

int hash = hash(key.hashCode());

return segmentFor(hash).put(key, hash, value, false);

}

/**

* 哈希函数 - 减少碰撞

*/

private static int hash(int h) {

h ^= (h >>> 20) ^ (h >>> 12);

return h ^ (h >>> 7) ^ (h >>> 4);

}

}

/**

* 读写锁优化 - 锁降级示例

*/

public class ReadWriteLockCache<K, V> {

private final Map<K, V> cache = new HashMap<>();

private final ReadWriteLock rwLock = new ReentrantReadWriteLock();

private final Lock readLock = rwLock.readLock();

private final Lock writeLock = rwLock.writeLock();

/**

* 锁降级:写锁 -> 读锁

* 保证数据一致性同时提高读性能

*/

public V getWithLockDowngrade(K key) {

V value;

// 首先尝试读锁

readLock.lock();

try {

value = cache.get(key);

if (value != null) {

return value;

}

} finally {

readLock.unlock();

}

// 缓存未命中,获取写锁

writeLock.lock();

try {

// 双重检查,防止其他线程已经加载

value = cache.get(key);

if (value == null) {

// 模拟从数据库加载

value = loadFromDatabase(key);

cache.put(key, value);

}

// 锁降级:在写锁内部获取读锁

readLock.lock();

} finally {

writeLock.unlock(); // 释放写锁,但仍然持有读锁

}

try {

// 这里仍然持有读锁,可以安全地使用value

processValue(value);

return value;

} finally {

readLock.unlock();

}

}

/**

* 批量更新优化 - 减少锁粒度

*/

public void batchUpdate(Map<K, V> updates) {

if (updates.isEmpty()) return;

// 对更新进行分组,减少锁竞争

Map<Object, Map<K, V>> segmentedUpdates = segmentUpdates(updates);

for (Map<K, V> segment : segmentedUpdates.values()) {

writeLock.lock();

try {

cache.putAll(segment);

} finally {

writeLock.unlock();

}

}

}

private Map<Object, Map<K, V>> segmentUpdates(Map<K, V> updates) {

// 简化的分段逻辑,实际可根据key的hash进行分段

Map<Object, Map<K, V>> segments = new HashMap<>();

Map<K, V> segment = new HashMap<>(updates);

segments.put("segment1", segment);

return segments;

}

private V loadFromDatabase(K key) {

// 模拟数据库加载

try {

Thread.sleep(10);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

}

return (V) ("value_" + key);

}

private void processValue(V value) {

// 处理值的逻辑

}

}2. 死锁检测与预防机制

/**

* 死锁检测与预防工具

*/

public class DeadlockDetector {

private final ScheduledExecutorService scheduler =

Executors.newSingleThreadScheduledExecutor();

private final Set<Thread> monitoredThreads = ConcurrentHashMap.newKeySet();

private final Map<Thread, LockInfo> threadLockInfo = new ConcurrentHashMap<>();

/**

* 可检测死锁的锁

*/

public static class DetectableReentrantLock extends ReentrantLock {

private final String lockName;

private final ThreadLocal<Long> lockAcquireTime = new ThreadLocal<>();

public DetectableReentrantLock(String lockName) {

this.lockName = lockName;

}

@Override

public void lock() {

long startTime = System.currentTimeMillis();

super.lock();

long acquireTime = System.currentTimeMillis() - startTime;

if (acquireTime > 1000) { // 获取锁超过1秒,记录警告

System.err.println("锁获取缓慢: " + lockName + ", 耗时: " + acquireTime + "ms");

}

lockAcquireTime.set(System.currentTimeMillis());

recordLockAcquisition();

}

@Override

public void unlock() {

Long acquireTime = lockAcquireTime.get();

if (acquireTime != null) {

long holdTime = System.currentTimeMillis() - acquireTime;

if (holdTime > 5000) { // 持有锁超过5秒,记录警告

System.err.println("锁持有时间过长: " + lockName + ", 持有: " + holdTime + "ms");

}

lockAcquireTime.remove();

}

super.unlock();

}

private void recordLockAcquisition() {

Thread currentThread = Thread.currentThread();

DeadlockDetector.getInstance().recordLockAcquire(currentThread, this);

}

}

/**

* 锁信息记录

*/

static class LockInfo {

private final Thread thread;

private final DetectableReentrantLock lock;

private final long acquireTime;

private final StackTraceElement[] stackTrace;

public LockInfo(Thread thread, DetectableReentrantLock lock) {

this.thread = thread;

this.lock = lock;

this.acquireTime = System.currentTimeMillis();

this.stackTrace = Thread.currentThread().getStackTrace();

}

}

private static final DeadlockDetector INSTANCE = new DeadlockDetector();

private DeadlockDetector() {

// 启动死锁检测

scheduler.scheduleAtFixedRate(this::detectDeadlocks, 5, 5, TimeUnit.SECONDS);

}

public static DeadlockDetector getInstance() {

return INSTANCE;

}

public void recordLockAcquire(Thread thread, DetectableReentrantLock lock) {

threadLockInfo.put(thread, new LockInfo(thread, lock));

monitoredThreads.add(thread);

}

public void recordLockRelease(Thread thread) {

threadLockInfo.remove(thread);

}

/**

* 死锁检测算法

*/

private void detectDeadlocks() {

Map<Thread, LockInfo> currentLocks = new HashMap<>(threadLockInfo);

// 检查锁等待关系

for (LockInfo info : currentLocks.values()) {

if (isThreadBlocked(info.thread)) {

analyzePotentialDeadlock(info.thread, currentLocks);

}

}

// 检查锁持有时间

checkLockHoldTime(currentLocks);

}

private boolean isThreadBlocked(Thread thread) {

return thread.getState() == Thread.State.BLOCKED;

}

private void analyzePotentialDeadlock(Thread blockedThread, Map<Thread, LockInfo> locks) {

// 构建锁等待图

Map<DetectableReentrantLock, Thread> lockOwners = new HashMap<>();

Map<Thread, DetectableReentrantLock> waitingLocks = new HashMap<>();

for (LockInfo info : locks.values()) {

lockOwners.put(info.lock, info.thread);

}

// 查找等待关系

for (LockInfo info : locks.values()) {

if (info.thread.getState() == Thread.State.BLOCKED) {

// 这里简化处理,实际需要获取线程等待的锁

waitingLocks.put(info.thread, info.lock);

}

}

// 检测循环等待

if (hasCycle(waitingLocks, lockOwners)) {

System.err.println("检测到可能的死锁!");

dumpDeadlockInfo(waitingLocks, lockOwners);

}

}

private boolean hasCycle(Map<Thread, DetectableReentrantLock> waitingLocks,

Map<DetectableReentrantLock, Thread> lockOwners) {

// 简化的环检测算法

for (Map.Entry<Thread, DetectableReentrantLock> entry : waitingLocks.entrySet()) {

Thread waiter = entry.getKey();

DetectableReentrantLock waitingLock = entry.getValue();

Thread owner = lockOwners.get(waitingLock);

if (owner != null && waitingLocks.containsKey(owner)) {

DetectableReentrantLock ownerWaitingLock = waitingLocks.get(owner);

if (lockOwners.get(ownerWaitingLock) == waiter) {

return true; // 发现循环等待

}

}

}

return false;

}

private void checkLockHoldTime(Map<Thread, LockInfo> locks) {

long currentTime = System.currentTimeMillis();

for (LockInfo info : locks.values()) {

long holdTime = currentTime - info.acquireTime;

if (holdTime > 30000) { // 30秒

System.err.println("锁持有时间超长警告:");

System.err.println("线程: " + info.thread.getName());

System.err.println("锁: " + info.lock.lockName);

System.err.println("持有时间: " + holdTime + "ms");

// 打印堆栈信息

for (StackTraceElement element : info.stackTrace) {

System.err.println(" " + element);

}

}

}

}

private void dumpDeadlockInfo(Map<Thread, DetectableReentrantLock> waitingLocks,

Map<DetectableReentrantLock, Thread> lockOwners) {

System.err.println("=== 死锁信息 ===");

for (Map.Entry<Thread, DetectableReentrantLock> entry : waitingLocks.entrySet()) {

System.err.println("线程 " + entry.getKey().getName() +

" 等待锁 " + entry.getValue().lockName);

}

}

}

/**

* 锁顺序化 - 死锁预防

*/

public class LockOrdering {

/**

* 基于资源ID的锁顺序化

*/

public static class OrderedLockManager {

private final ConcurrentHashMap<Object, Object> locks = new ConcurrentHashMap<>();

public void withOrderedLocks(Object resource1, Object resource2, Runnable task) {

// 确保锁的顺序一致性

Object firstLock, secondLock;

int hash1 = System.identityHashCode(resource1);

int hash2 = System.identityHashCode(resource2);

if (hash1 < hash2) {

firstLock = resource1;

secondLock = resource2;

} else if (hash1 > hash2) {

firstLock = resource2;

secondLock = resource1;

} else {

// hash冲突,使用tie-breaker

firstLock = getTieLock(resource1, resource2);

secondLock = (firstLock == resource1) ? resource2 : resource1;

}

synchronized (firstLock) {

synchronized (secondLock) {

task.run();

}

}

}

private Object getTieLock(Object resource1, Object resource2) {

// 使用其他机制打破平局

return locks.computeIfAbsent(resource1, k -> new Object());

}

}

/**

* 尝试锁 - 避免死锁

*/

public static class TryLockManager {

private final Lock lock1 = new ReentrantLock();

private final Lock lock2 = new ReentrantLock();

public boolean tryBothLocks(long timeout, TimeUnit unit) throws InterruptedException {

long stopTime = System.nanoTime() + unit.toNanos(timeout);

while (true) {

if (lock1.tryLock()) {

try {

if (lock2.tryLock(100, TimeUnit.MILLISECONDS)) {

return true; // 成功获取两个锁

}

} finally {

lock1.unlock();

}

}

if (System.nanoTime() > stopTime) {

return false;

}

// 随机退避

Thread.sleep(ThreadLocalRandom.current().nextInt(50, 100));

}

}

}

}四、并发集合底层原理与性能对比

1. ConcurrentHashMap源码级分析

/**

* ConcurrentHashMap实现原理分析

*/

public class ConcurrentHashMapAnalysis {

/**

* JDK 8 ConcurrentHashMap核心改进:

* 1. 取消分段锁,使用Node数组 + synchronized + CAS

* 2. 树化优化:链表转红黑树

* 3. 扩容协助机制

*/

// 模拟ConcurrentHashMap的putVal方法核心逻辑

public static class ConcurrentHashMapSimulator {

transient volatile Node[] table;

final V putVal(K key, V value, boolean onlyIfAbsent) {

if (key == null || value == null) throw new NullPointerException();

int hash = spread(key.hashCode());

int binCount = 0;

for (Node<K,V>[] tab = table;;) {

Node<K,V> f; int n, i, fh;

if (tab == null || (n = tab.length) == 0) {

tab = initTable(); // 延迟初始化

} else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {

// CAS插入新节点

if (casTabAt(tab, i, null, new Node<K,V>(hash, key, value, null))) {

break;

}

} else if ((fh = f.hash) == MOVED) {

tab = helpTransfer(tab, f); // 协助扩容

} else {

V oldVal = null;

synchronized (f) { // 对链表头加锁

if (tabAt(tab, i) == f) {

if (fh >= 0) { // 链表节点

binCount = 1;

for (Node<K,V> e = f;; ++binCount) {

K ek;

if (e.hash == hash && ((ek = e.key) == key || (ek != null && key.equals(ek)))) {

oldVal = e.val;

if (!onlyIfAbsent) {

e.val = value;

}

break;

}

Node<K,V> pred = e;

if ((e = e.next) == null) {

pred.next = new Node<K,V>(hash, key, value, null);

break;

}

}

} else if (f instanceof TreeBin) { // 树节点

// 红黑树处理...

}

}

}

if (binCount != 0) {

if (binCount >= TREEIFY_THRESHOLD) {

treeifyBin(tab, i); // 链表转树

}

if (oldVal != null) {

return oldVal;

}

break;

}

}

}

addCount(1L, binCount); // 计数增加,可能触发扩容

return null;

}

// 原子操作:获取数组元素

static final <K,V> Node<K,V> tabAt(Node<K,V>[] tab, int i) {

return (Node<K,V>)U.getObjectVolatile(tab, ((long)i << ASHIFT) + ABASE);

}

// CAS操作:设置数组元素

static final <K,V> boolean casTabAt(Node<K,V>[] tab, int i, Node<K,V> c, Node<K,V> v) {

return U.compareAndSwapObject(tab, ((long)i << ASHIFT) + ABASE, c, v);

}

}

/**

* 并发集合性能对比测试

*/

public static class ConcurrentCollectionBenchmark {

private static final int THREAD_COUNT = 8;

private static final int OPERATION_COUNT = 100000;

public void benchmark() throws InterruptedException {

// HashMap vs ConcurrentHashMap

testMapPerformance(new HashMap<>(), "HashMap");

testMapPerformance(new ConcurrentHashMap<>(), "ConcurrentHashMap");

testMapPerformance(Collections.synchronizedMap(new HashMap<>()), "SynchronizedMap");

// ArrayList vs CopyOnWriteArrayList

testListPerformance(new ArrayList<>(), "ArrayList");

testListPerformance(new CopyOnWriteArrayList<>(), "CopyOnWriteArrayList");

testListPerformance(Collections.synchronizedList(new ArrayList<>()), "SynchronizedList");

}

private void testMapPerformance(Map<String, Integer> map, String mapName)

throws InterruptedException {

long startTime = System.currentTimeMillis();

CountDownLatch latch = new CountDownLatch(THREAD_COUNT);

AtomicLong totalOps = new AtomicLong();

for (int i = 0; i < THREAD_COUNT; i++) {

final int threadId = i;

new Thread(() -> {

try {

Random random = new Random();

for (int j = 0; j < OPERATION_COUNT; j++) {

String key = "key_" + threadId + "_" + j;

// 混合操作:80%读,15%写,5%删除

double op = random.nextDouble();

if (op < 0.8) {

map.get(key);

} else if (op < 0.95) {

map.put(key, j);

} else {

map.remove(key);

}

totalOps.incrementAndGet();

}

} finally {

latch.countDown();

}

}).start();

}

latch.await();

long duration = System.currentTimeMillis() - startTime;

double throughput = (double) totalOps.get() / duration * 1000;

System.out.printf("%s: 耗时=%dms, 吞吐量=%.2f ops/s%n",

mapName, duration, throughput);

}

private void testListPerformance(List<String> list, String listName)

throws InterruptedException {

// 类似的性能测试逻辑...

}

}

}

/**

* 无锁并发队列性能分析

*/

public class LockFreeQueueAnalysis {

/**

* 基于CAS的无锁队列实现

*/

public static class LockFreeQueue<E> {

private static class Node<E> {

volatile E item;

volatile Node<E> next;

Node(E item) {

this.item = item;

}

}

private volatile Node<E> head;

private volatile Node<E> tail;

public LockFreeQueue() {

Node<E> dummy = new Node<>(null);

head = dummy;

tail = dummy;

}

public boolean offer(E item) {

Node<E> newNode = new Node<>(item);

while (true) {

Node<E> currentTail = tail;

Node<E> tailNext = currentTail.next;

if (currentTail == tail) { // 检查一致性

if (tailNext == null) {

// CAS更新tail的next指针

if (compareAndSetNext(currentTail, null, newNode)) {

// 更新tail指针(允许失败)

compareAndSetTail(currentTail, newNode);

return true;

}

} else {

// 协助其他线程完成tail更新

compareAndSetTail(currentTail, tailNext);

}

}

}

}

public E poll() {

while (true) {

Node<E> currentHead = head;

Node<E> currentTail = tail;

Node<E> first = currentHead.next;

if (currentHead == head) { // 检查一致性

if (currentHead == currentTail) {

if (first == null) {

return null; // 队列为空

}

// 其他线程正在添加元素,协助更新tail

compareAndSetTail(currentTail, first);

} else {

E item = first.item;

if (item != null && compareAndSetHead(currentHead, first)) {

first.item = null; // 帮助GC

return item;

}

}

}

}

}

// CAS操作模拟

private boolean compareAndSetTail(Node<E> expect, Node<E> update) {

// 模拟CAS操作

return true;

}

private boolean compareAndSetHead(Node<E> expect, Node<E> update) {

// 模拟CAS操作

return true;

}

private boolean compareAndSetNext(Node<E> node, Node<E> expect, Node<E> update) {

// 模拟CAS操作

return true;

}

}

/**

* 不同队列实现性能对比

*/

public static void queuePerformanceComparison() throws InterruptedException {

int producerCount = 4;

int consumerCount = 4;

int messagesPerProducer = 100000;

// 测试不同队列实现

testQueuePerformance(new ConcurrentLinkedQueue<>(),

"ConcurrentLinkedQueue", producerCount, consumerCount, messagesPerProducer);

testQueuePerformance(new LinkedBlockingQueue<>(),

"LinkedBlockingQueue", producerCount, consumerCount, messagesPerProducer);

testQueuePerformance(new ArrayBlockingQueue<>(100000),

"ArrayBlockingQueue", producerCount, consumerCount, messagesPerProducer);

}

private static void testQueuePerformance(Queue<Integer> queue, String queueName,

int producerCount, int consumerCount,

int messagesPerProducer) throws InterruptedException {

long startTime = System.currentTimeMillis();

CountDownLatch producerLatch = new CountDownLatch(producerCount);

CountDownLatch consumerLatch = new CountDownLatch(consumerCount);

AtomicInteger consumedCount = new AtomicInteger();

// 生产者线程

for (int i = 0; i < producerCount; i++) {

final int producerId = i;

new Thread(() -> {

try {

for (int j = 0; j < messagesPerProducer; j++) {

queue.offer(producerId * messagesPerProducer + j);

}

} finally {

producerLatch.countDown();

}

}).start();

}

// 消费者线程

for (int i = 0; i < consumerCount; i++) {

new Thread(() -> {

try {

int count = 0;

while (count < messagesPerProducer * producerCount / consumerCount) {

Integer item = queue.poll();

if (item != null) {

consumedCount.incrementAndGet();

count++;

}

}

} finally {

consumerLatch.countDown();

}

}).start();

}

producerLatch.await();

consumerLatch.await();

long duration = System.currentTimeMillis() - startTime;

System.out.printf("%s: 耗时=%dms, 生产=%d, 消费=%d%n",

queueName, duration, producerCount * messagesPerProducer, consumedCount.get());

}

}五、总结与最佳实践

通过本文的深度分析,我们可以得出Java并发编程的关键要点:

1. 性能优化核心原则

- 锁粒度优化:尽可能减小锁的范围和时间

- 无锁编程:在合适场景使用CAS和原子操作

- 资源池化:合理使用线程池和连接池

- 异步处理:使用CompletableFuture等异步编程模型

2. 并发设计模式

- 生产者-消费者:使用BlockingQueue解耦

- 读写锁分离:读多写少场景使用ReadWriteLock

- 副本写入:CopyOnWriteArrayList适用于读多写极少场景

- 分治策略:将大任务拆分为小任务并行处理

3. 监控与调试

- 线程池监控:实时监控队列深度、活跃线程等指标

- 死锁检测:定期检查锁等待关系

- 性能剖析:使用JMH进行微基准测试

- 日志追踪:完善的日志记录和链路追踪

4. 常见陷阱避免

- 线程泄漏:确保线程池正确关闭

- 资源竞争:避免共享资源的无序访问

- 上下文切换:控制线程数量,避免过度切换

- 内存可见性:正确使用volatile和内存屏障

通过深入理解JUC框架的底层原理,结合实际业务场景的优化实践,开发者可以构建出高性能、高可用的并发系统。持续的性能测试、监控和调优是保证系统稳定运行的关键。

© 版权声明

THE END

暂无评论内容